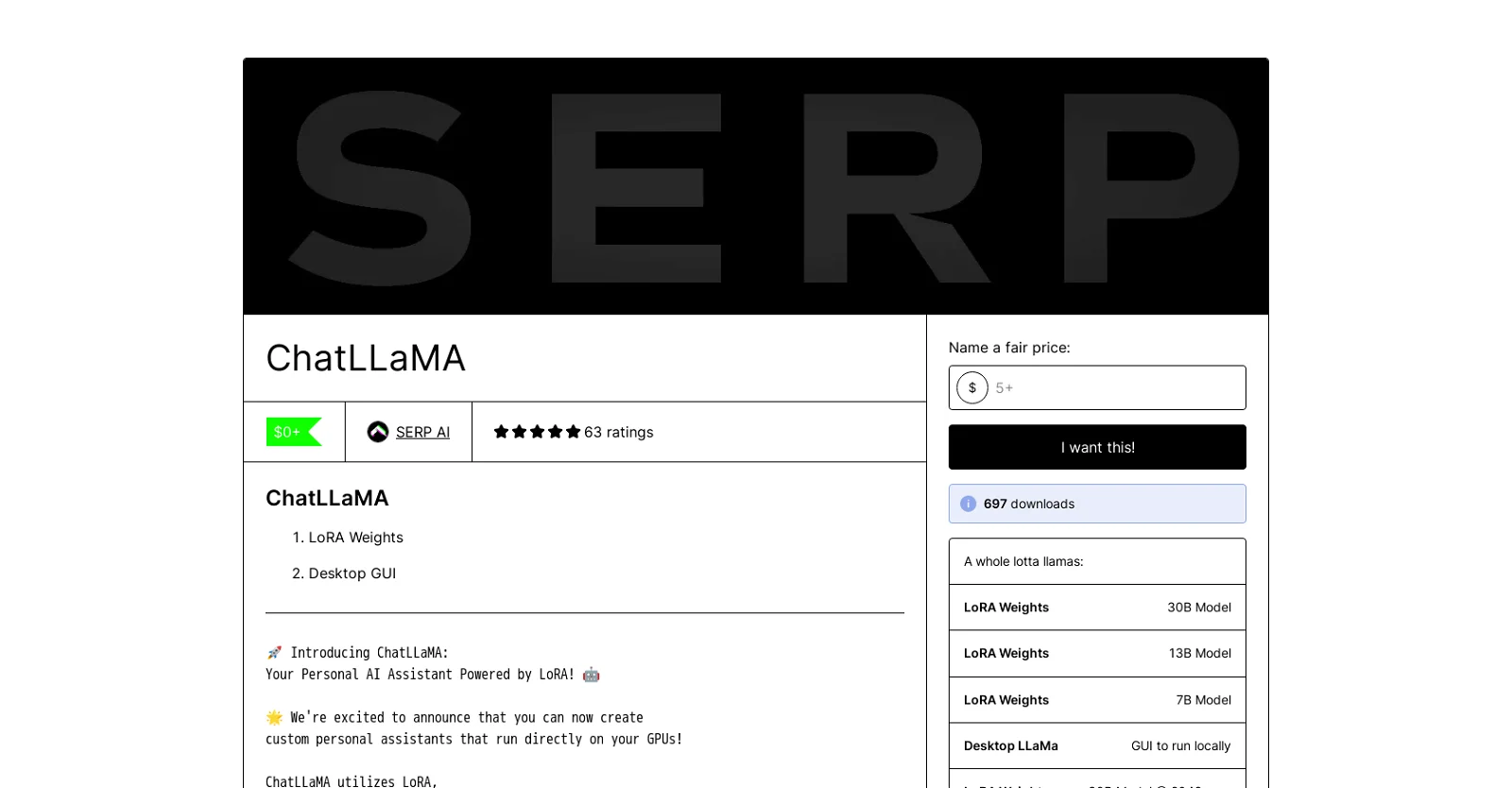

ChatLLaMA is an AI tool that lets users create their own personal AI assistants that run directly on GPUs. It uses a LoRA (Low-Rank Adaptation) adapter trained on Anthropic's HH (Helpful and Harmless) dataset to model conversations between an AI assistant and its users.

An RLHF-trained version of the LoRA will be available soon. The tool currently comes in 30B, 13B, and 7B model sizes. Users can also contribute high-quality dialogue-style datasets, which will be used to train ChatLLaMA and improve its conversation quality.

ChatLLaMA comes with a desktop GUI for running it locally. Note that the tool is intended for research use, and the foundation model weights are not included.

The original post announcing ChatLLaMA was run through GPT-4 to improve its readability. The ChatLLaMA team also offers GPU compute to developers in exchange for coding help; interested developers can contact @devinschumacher on the Discord server.

Overall, ChatLLaMA gives users a way to build a personal AI assistant tuned for conversation, its availability in several model sizes makes it flexible, and its GPU-for-code offer gives developers a way to contribute to improving AI conversation systems.