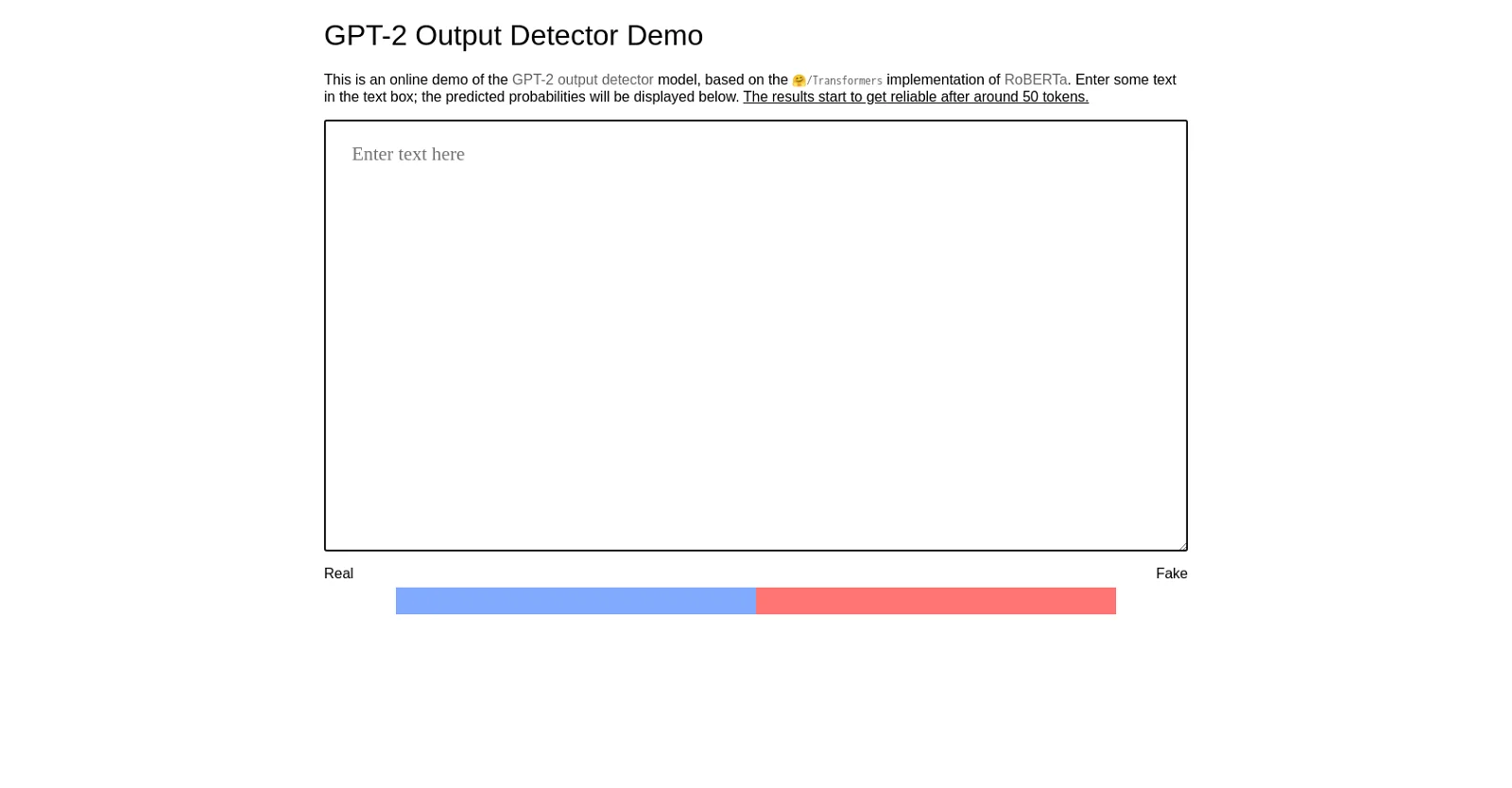

GPT-2 Output Detector is an online demo of a machine learning model trained to estimate whether a piece of text was written by a human or generated by GPT-2. The detector was released by OpenAI and is built on the 🤗/Transformers implementation of RoBERTa, a model originally developed by Facebook AI.

In the demo, users paste text into a text box, and the predicted probabilities that the text is "real" (human-written) or "fake" (machine-generated) are displayed below it. The demo notes that its results only become reliable after roughly 50 tokens of input.
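To illustrate the workflow, the following Python sketch runs a RoBERTa-based detector locally through the Transformers library. It is a minimal sketch under stated assumptions, not the demo's own code. It assumes the released weights are available on the Hugging Face Hub under the ID `openai-community/roberta-base-openai-detector`, and that `torch` and `transformers` are installed. The helpers `softmax`, `label_probabilities` and `detect` are hypothetical names chosen for this example. The 50-token threshold follows the demo's guidance.

```python
import math
from typing import Dict, List

# Assumption: Hub ID of the released RoBERTa-base detector weights.
MODEL_ID = "openai-community/roberta-base-openai-detector"
# The demo states results become reliable after roughly 50 tokens.
MIN_TOKENS = 50


def softmax(logits: List[float]) -> List[float]:
    """Convert raw classifier scores into probabilities that sum to 1."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def label_probabilities(logits: List[float], labels: List[str]) -> Dict[str, float]:
    """Pair each class label with its softmax probability."""
    return dict(zip(labels, softmax(logits)))


def detect(text: str) -> Dict[str, object]:
    """Hypothetical helper: classify `text` and flag short inputs as unreliable."""
    # Imported lazily so the pure helpers above work without torch/transformers.
    import torch
    from transformers import AutoModelForSequenceClassification, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(MODEL_ID)
    model = AutoModelForSequenceClassification.from_pretrained(MODEL_ID)

    # Count BPE tokens without the special <s> and </s> markers.
    num_tokens = len(tokenizer.tokenize(text))
    # RoBERTa accepts at most 512 positions, so truncate long inputs.
    inputs = tokenizer(text, return_tensors="pt", truncation=True)
    with torch.no_grad():
        logits = model(**inputs).logits[0].tolist()

    # Read label names from the model config rather than hard-coding them.
    labels = [model.config.id2label[i] for i in range(len(logits))]
    return {
        "probabilities": label_probabilities(logits, labels),
        "num_tokens": num_tokens,
        "reliable": num_tokens >= MIN_TOKENS,
    }
```

For example, `detect(some_text)["probabilities"]` returns a mapping from each label defined in the model's configuration to its probability. The `reliable` flag mirrors the demo's advice to treat results on inputs shorter than about 50 tokens with caution.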

GPT-2 Output Detector offers a quick way to flag text that may have been generated by GPT-2. Possible applications include checking whether news articles were machine-written and filtering out automatically generated spam. Because the model outputs probabilities rather than a verdict, its predictions are best treated as a signal, not as proof.