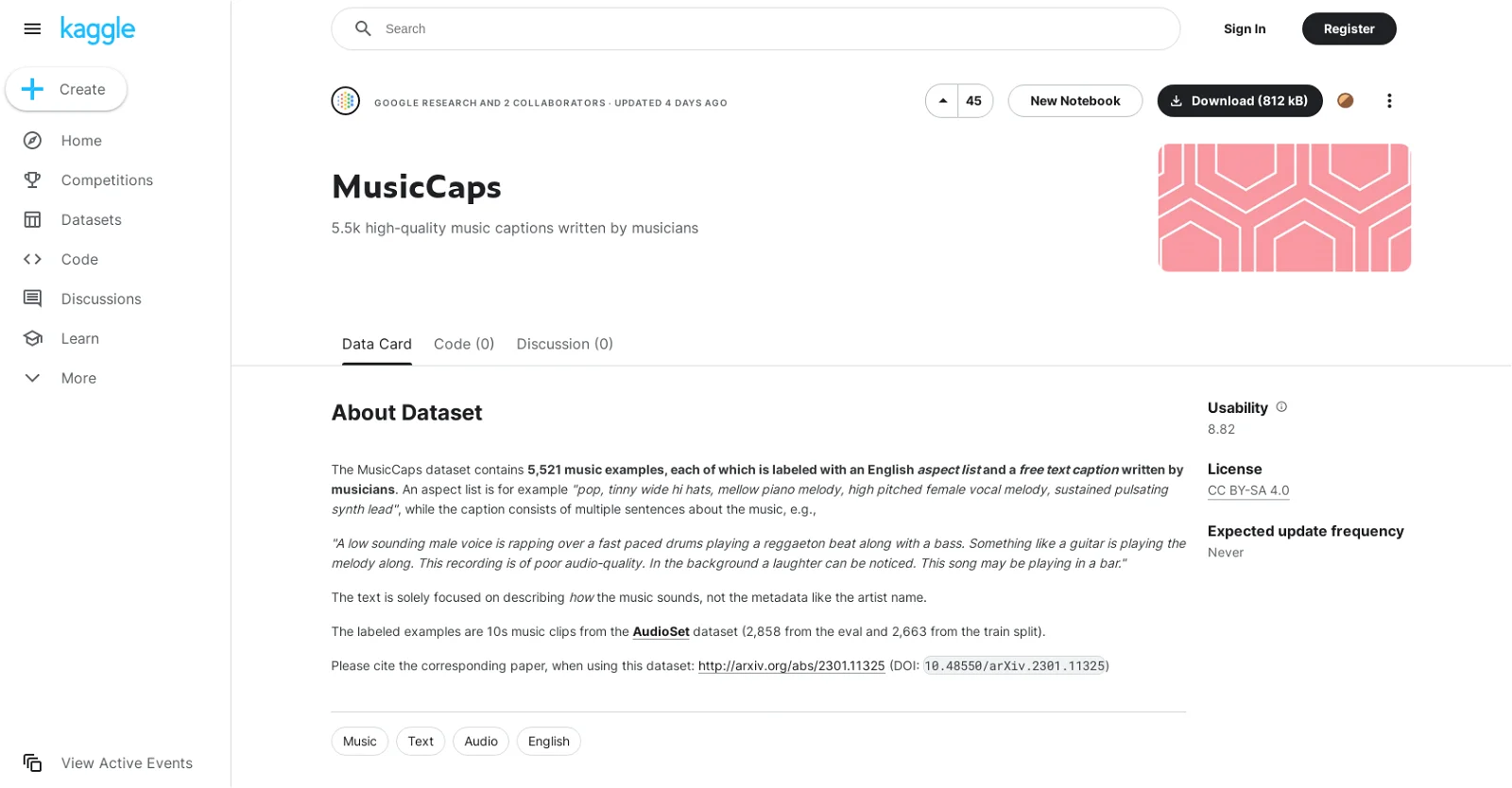

MusicCaps is a dataset of 5,521 music clips of 10 seconds each. Every clip is labeled with an aspect list and a free-text caption, both written by musicians. An aspect list is a comma-separated list of short phrases that describe how the music sounds, such as “pop, tinny wide hi hats, mellow piano melody, high pitched female vocal melody, sustained pulsating synth lead”.
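As a rough illustration of the aspect list format, the sketch below splits the example string above into individual aspects. It assumes the aspects arrive as a single comma-separated string, as in the example; the function name `parse_aspect_list` is hypothetical, and the on-disk encoding of the real dataset may differ.

```python
def parse_aspect_list(raw: str) -> list[str]:
    """Split a comma-separated aspect string into trimmed, non-empty aspects."""
    return [part.strip() for part in raw.split(",") if part.strip()]


# The example aspect list quoted in the text above.
example = (
    "pop, tinny wide hi hats, mellow piano melody, "
    "high pitched female vocal melody, sustained pulsating synth lead"
)

aspects = parse_aspect_list(example)
print(len(aspects))  # 5
print(aspects[0])    # pop
```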

The free-text caption is a description of how the music sounds, including details like instruments and mood. MusicCaps is sourced from the AudioSet dataset and is divided into an eval and train split.

The dataset is licensed under a Creative Commons BY-SA 4.0 license. Each clip carries the following metadata: the YT ID (pointing to the YouTube video in which the labeled music segment appears), the start and end position in the video, labels from the AudioSet dataset, the aspect list, the caption, an author ID (for grouping samples by who wrote them), a flag indicating whether the clip belongs to the balanced subset, and a flag indicating whether it comes from the AudioSet eval split.
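One way to picture the per-clip metadata is as a small record type. The sketch below is illustrative only: the field names, the types, and the sample values (including the video IDs) are assumptions made for this example, not the dataset's confirmed schema. It shows how the start position can be combined with the YT ID to link to the labeled segment, how the author ID supports grouping, and how the AudioSet eval flag can partition clips.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Clip:
    # Field names are illustrative assumptions, not confirmed column names.
    ytid: str
    start_s: int
    end_s: int
    audioset_labels: tuple[str, ...]
    aspect_list: tuple[str, ...]
    caption: str
    author_id: int
    is_balanced_subset: bool
    is_audioset_eval: bool

    @property
    def duration_s(self) -> int:
        return self.end_s - self.start_s

    def youtube_url(self) -> str:
        # YouTube watch URL with a start offset in seconds.
        return f"https://www.youtube.com/watch?v={self.ytid}&t={self.start_s}s"


def group_by_author(clips):
    """Map each author ID to the clips that author captioned."""
    groups = defaultdict(list)
    for clip in clips:
        groups[clip.author_id].append(clip)
    return dict(groups)


def partition_by_audioset_eval(clips):
    """Return (clips flagged as AudioSet eval, all remaining clips)."""
    flagged = [c for c in clips if c.is_audioset_eval]
    rest = [c for c in clips if not c.is_audioset_eval]
    return flagged, rest


# Made-up records for demonstration; these are not real dataset entries.
clips = [
    Clip("hypothetical01", 30, 40, ("Music",), ("pop", "mellow piano melody"),
         "A mellow pop song led by piano.", 1, True, True),
    Clip("hypothetical02", 10, 20, ("Music",), ("rock", "distorted guitar"),
         "An energetic rock track with distorted guitar.", 1, False, False),
    Clip("hypothetical03", 0, 10, ("Music",), ("ambient", "soft pads"),
         "A calm ambient piece with soft pads.", 2, False, True),
]

print(clips[0].youtube_url())
flagged, rest = partition_by_audioset_eval(clips)
print(len(flagged), len(rest))
```

A frozen dataclass keeps each record immutable, which suits read-only annotation data. Whether the AudioSet eval flag alone defines the eval and train split is not stated here, so the partition helper is named after the flag rather than the split.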

The dataset is intended to be used for music description tasks.